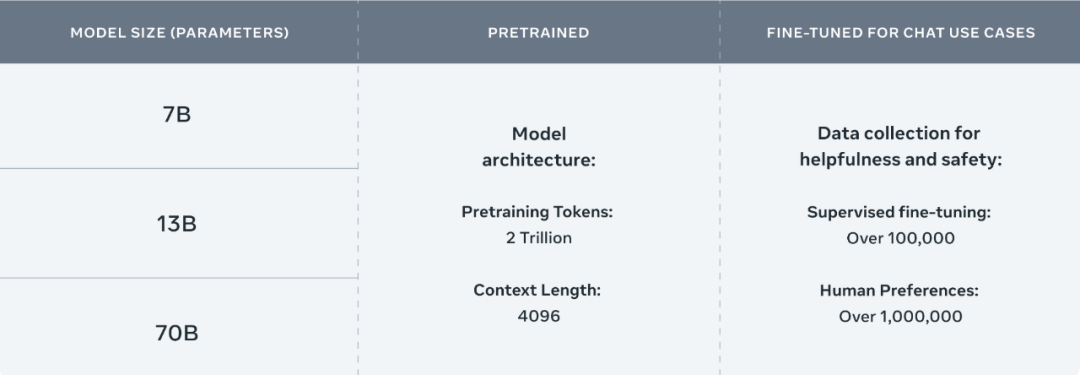

llama2 使用权重训练脚本

You can follow the steps below to quickly get up and running with Llama 2 models. These steps will let you run quick inference locally. For more examples, see the Llama 2 recipes repository.

- In a conda env with PyTorch / CUDA available clone and download this repository.

- In the top-level directory run:

pip install -e . - Visit the Meta website and register to download the model/s.

- Once registered, you will get an email with a URL to download the models. You will need this URL when you run the download.sh script.

- Once you get the email, navigate to your downloaded llama repository and run the download.sh script.

- Make sure to grant execution permissions to the download.sh script

- During this process, you will be prompted to enter the URL from the email.

- Do not use the “Copy Link” option but rather make sure to manually copy the link from the email.

- Once the model/s you want have been downloaded, you can run the model locally using the command below:

torchrun --nproc_per_node 1 example_chat_completion.py \

--ckpt_dir llama-2-7b-chat/ \

--tokenizer_path tokenizer.model \

--max_seq_len 512 --max_batch_size 6

Note

- Replace

llama-2-7b-chat/with the path to your checkpoint directory andtokenizer.modelwith the path to your tokenizer model. - The

–nproc_per_nodeshould be set to the MP value for the model you are using. - Adjust the

max_seq_lenandmax_batch_sizeparameters as needed. - This example runs the example_chat_completion.py found in this repository but you can change that to a different .py file.

# 句子补全

torchrun –nproc_per_node 1 example_text_completion.py \ –ckpt_dir llama-2-7b/ \ –tokenizer_path tokenizer.model \ –max_seq_len 128 –max_batch_size 4

# 对话生成

torchrun –nproc_per_node 1 example_chat_completion.py \ –ckpt_dir llama-2-7b-chat/ \ –tokenizer_path tokenizer.model \ –max_seq_len 512 –max_batch_size 4

torchrun –nproc_per_node 1 ./example_text_completion.py –ckpt_dir ../models/llama-2-7b/ –tokenizer_path ../models/llama-2-7b/tokenizer.model –max_seq_len 512 –max_batch_size 6

最低配:

torchrun –nproc_per_node 1 example_text_completion.py –ckpt_dir llama-2-7b/ –tokenizer_path tokenizer.model –max_seq_len 64 –max_batch_size 2

ln -h ./llama-2-7b-tokenizer.model ./llama-2-7b/tokenizer.model

脚本说明:

torchrun: PyTorch的分布式启动工具,用于启动分布式训练

–nproc_per_node 1: 每个节点上使用1个进程

example_text_completion.py: 要运行的训练脚本

–ckpt_dir llama-2-7b/: 检查点保存目录,这里是llama-2-7b,即加载Llama 7B模型

–tokenizer_path tokenizer.model: 分词器路径

–max_seq_len 512: 最大序列长度

–max_batch_size 6: 最大批大小

扫码领红包 微信赞赏

微信赞赏 支付宝扫码领红包

支付宝扫码领红包